Emotion Detection with Convolutional Neural Networks

Why?

To explore machine learning techniques for image classification.

What?

OpenCV handles face detection. A CNN trained on FER2013 handles emotion classification. Chain them together for live video analysis.
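At a high level, the chain is: detect faces in each frame, crop each face, then classify each crop. The sketch below shows only that structure in plain Python. The detector and classifier are passed in as functions. `label_faces`, `fake_detector` and `fake_classifier` are hypothetical names used for illustration. In the real pipeline they would be OpenCV's Haar cascade and the trained CNN.

```python
from typing import Callable, List, Sequence, Tuple

# A bounding box as (x, y, w, h), the same layout OpenCV's detectMultiScale returns.
Box = Tuple[int, int, int, int]


def label_faces(
    frame: Sequence[Sequence[int]],
    detect_faces: Callable[[Sequence[Sequence[int]]], List[Box]],
    classify_face: Callable[[Sequence[Sequence[int]]], str],
) -> List[Tuple[Box, str]]:
    """Hypothetical pipeline: run detection once per frame, then classify each face crop."""
    results = []
    for (x, y, w, h) in detect_faces(frame):
        # Crop the face region; the frame is indexed [row][column].
        crop = [row[x:x + w] for row in frame[y:y + h]]
        results.append(((x, y, w, h), classify_face(crop)))
    return results


# Hypothetical stand-ins for the real detector (Haar cascade) and classifier (CNN).
def fake_detector(frame):
    return [(1, 1, 2, 2)]


def fake_classifier(crop):
    return "Happy" if sum(map(sum, crop)) > 0 else "Neutral"


frame = [[0, 0, 0, 0],
         [0, 9, 9, 0],
         [0, 9, 9, 0],
         [0, 0, 0, 0]]
print(label_faces(frame, fake_detector, fake_classifier))
```

Keeping detection and classification as separate steps means either one can be swapped out without touching the other.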

Code

Set up the data

For training, we use the FER2013 dataset of 48x48 grayscale face images, each labelled with one of seven emotions. The raw data is available on Kaggle (https://www.kaggle.com/datasets/msambare/fer2013).
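We assume the Kaggle download is organized as `train/` and `test/` folders, with one subfolder per emotion. Keras then infers the labels from those subfolder names. When `classes` is not given, `flow_from_directory` documents that class indices follow the alphanumeric order of the subdirectories. The generator exposes the result as `class_indices`. The sketch below imitates that rule with the standard library. `infer_class_indices` and `one_hot` are illustrative helpers, not Keras functions.

```python
import os
import tempfile


def infer_class_indices(directory: str) -> dict:
    """Illustrative helper: each subdirectory is a class, indexed in sorted order."""
    classes = sorted(
        name for name in os.listdir(directory)
        if os.path.isdir(os.path.join(directory, name))
    )
    return {name: index for index, name in enumerate(classes)}


def one_hot(index: int, num_classes: int) -> list:
    """class_mode='categorical' yields labels shaped like this one-hot vector."""
    return [1.0 if i == index else 0.0 for i in range(num_classes)]


with tempfile.TemporaryDirectory() as root:
    # Create the folders in a scrambled order: creation order does not affect the indices.
    for name in ["surprise", "angry", "sad", "happy", "disgust", "neutral", "fear"]:
        os.makedirs(os.path.join(root, name))
    indices = infer_class_indices(root)

print(indices)
print(one_hot(indices["happy"], len(indices)))
```

This ordering is why any hard-coded list of emotion names used at prediction time has to be alphabetical as well.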

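We load our data and apply transformations using the ImageDataGenerator class. The training images get small random rotations, shifts, shears, zooms, and horizontal flips. These make it harder for the model to memorize individual images, which reduces overfitting and helps it generalize to faces it has not seen. The test images are only rescaled.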

```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Source: https://www.kaggle.com/datasets/msambare/fer2013
train_dir = "../data/Project4/images/train/"
test_dir = "../data/Project4/images/test/"

# Define image parameters
IMG_HEIGHT = 48
IMG_WIDTH = 48
BATCH_SIZE = 1024
NUM_CLASSES = 7  # angry, disgust, fear, happy, neutral, sad, surprise

# Data augmentation and normalization for training (avoid overfitting)
train_datagen = ImageDataGenerator(
    rescale=1./255,          # Normalize pixel values between 0 and 1
    rotation_range=30,       # Randomly rotate images by up to 30 degrees
    width_shift_range=0.2,   # Randomly shift images horizontally (up to 20% of width)
    height_shift_range=0.2,  # Randomly shift images vertically (up to 20% of height)
    shear_range=0.2,         # Random shear transformations
    zoom_range=0.2,          # Randomly zoom in/out on images
    horizontal_flip=True,    # Randomly flip images horizontally
    fill_mode='nearest'      # Fill in missing pixels after transformations
)

# Only normalization for testing
test_datagen = ImageDataGenerator(rescale=1./255)

# Load and iterate training dataset
train_generator = train_datagen.flow_from_directory(
    train_dir,
    target_size=(IMG_HEIGHT, IMG_WIDTH),
    color_mode='grayscale',    # FER-2013 images are grayscale
    batch_size=BATCH_SIZE,
    class_mode='categorical',  # For multi-class classification
    shuffle=True
)

# Load and iterate test dataset
test_generator = test_datagen.flow_from_directory(
    test_dir,
    target_size=(IMG_HEIGHT, IMG_WIDTH),
    color_mode='grayscale',
    batch_size=BATCH_SIZE,
    class_mode='categorical',
    shuffle=False
)
```
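The generator above applies its transforms internally with random parameters. The NumPy sketch below makes three of them concrete on a tiny array: rescaling, a horizontal flip, and a one-pixel shift with nearest-edge filling. It illustrates the idea only and is not how Keras implements the transforms. The helper names are hypothetical.

```python
import numpy as np


def rescale(img: np.ndarray) -> np.ndarray:
    # Same effect as rescale=1./255: uint8 pixels in [0, 255] become floats in [0, 1].
    return img.astype("float32") / 255.0


def horizontal_flip(img: np.ndarray) -> np.ndarray:
    # Mirror left-right; a mirrored smile is still a smile, so the label is unchanged.
    return img[:, ::-1]


def shift_right_nearest(img: np.ndarray, pixels: int) -> np.ndarray:
    # Shift columns right and fill the exposed left edge by repeating the edge column,
    # roughly what fill_mode='nearest' does for a pure horizontal shift.
    padded = np.pad(img, ((0, 0), (pixels, 0)), mode="edge")
    return padded[:, : img.shape[1]]


img = np.array([[0, 128, 255],
                [10, 20, 30]], dtype=np.uint8)
print(rescale(img).max())
print(horizontal_flip(img))
print(shift_right_nearest(img, 1))
```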

Train our model

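Next, we set up a convolutional neural network (CNN) to predict the emotion in each image. A CNN suits this task because each convolutional layer slides small learned filters over the image to detect local patterns such as edges and corners. Pooling layers then shrink the resulting feature maps, so each deeper layer sees a larger region of the face. This lets it combine simple patterns into features like eyes, eyebrows, and mouth shapes. Because the same filters are reused at every position, the network can recognize a pattern wherever it appears in the image. It also needs far fewer parameters than a fully connected network would.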

Side note: CNNs have historically dominated image processing tasks, but recently vision transformer (ViT) models are on the rise and may outperform CNNs in some areas.
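To make the filter and pooling idea concrete, here is a hand-written NumPy sketch of a single 3x3 filter and 2x2 max pooling on a tiny image containing one vertical edge. The filter slides across every position ("valid" padding, stride 1). The edge-detecting kernel is chosen by hand for illustration, whereas a `Conv2D` layer learns its kernels during training. Like Keras `Conv2D`, the loop computes cross-correlation, so the kernel is not flipped.

```python
import numpy as np


def conv2d_valid(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide the kernel over every position where it fits fully (valid padding, stride 1)."""
    kh, kw = kernel.shape
    out_h, out_w = img.shape[0] - kh + 1, img.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype="float32")
    for i in range(out_h):
        for j in range(out_w):
            # Cross-correlation: multiply the patch by the unflipped kernel and sum.
            out[i, j] = np.sum(img[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0)


def max_pool_2x2(x: np.ndarray) -> np.ndarray:
    """Keep the largest value in each non-overlapping 2x2 block (an odd edge is dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


# A 6x6 image: dark on the left, bright on the right, i.e. one vertical edge.
img = np.zeros((6, 6), dtype="float32")
img[:, 3:] = 1.0

# Hand-made vertical-edge detector (a trained layer learns filters like this).
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype="float32")

features = relu(conv2d_valid(img, kernel))
print(features)                # strong responses only in the columns that straddle the edge
print(max_pool_2x2(features))  # a smaller map that still records that an edge is present
```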

```python
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf

model = tf.keras.models.Sequential([
    tf.keras.Input(shape=(IMG_HEIGHT, IMG_WIDTH, 1)),  # 48x48 grayscale input

    tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    tf.keras.layers.BatchNormalization(),

    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    tf.keras.layers.BatchNormalization(),

    tf.keras.layers.Conv2D(128, (3, 3), activation='relu'),
    tf.keras.layers.Conv2D(128, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    tf.keras.layers.BatchNormalization(),

    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(512, activation='relu'),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.BatchNormalization(),
    tf.keras.layers.Dense(NUM_CLASSES, activation='softmax')
])

# Compile the model
model.compile(
    optimizer='adam',
    loss='categorical_crossentropy',
    metrics=['accuracy']
)

# Print the model summary
model.summary()

# Train the model
EPOCHS = 50
history = model.fit(
    train_generator,
    epochs=EPOCHS,
    validation_data=test_generator,
)
```
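As a sanity check on `model.summary()`, the feature-map sizes and parameter count can be worked out by hand. Each unpadded 3x3 convolution trims 2 pixels, and each 2x2 pooling halves the size, rounding down. Each `BatchNormalization` layer holds 4 values per channel. Two of those four (the moving mean and variance) are counted as non-trainable. The plain-Python sketch below repeats that arithmetic for the architecture above. The helper names are our own.

```python
def conv_out(size: int, kernel: int = 3) -> int:
    # 'valid' padding (the Conv2D default) with stride 1 trims kernel - 1 pixels.
    return size - kernel + 1


def pool_out(size: int, pool: int = 2) -> int:
    # MaxPooling2D(2, 2) halves the spatial size, rounding down.
    return size // pool


def conv_params(in_ch: int, out_ch: int, kernel: int = 3) -> int:
    # One kernel x kernel x in_ch weight tensor plus one bias per output channel.
    return (kernel * kernel * in_ch + 1) * out_ch


size, channels, total = 48, 1, 0
for filters in (32, 64, 128):
    for _ in range(2):  # two Conv2D layers per block
        total += conv_params(channels, filters)
        size, channels = conv_out(size), filters
    size = pool_out(size)
    total += 4 * channels  # BatchNormalization: gamma, beta, moving mean, moving variance
    print(f"after {filters}-filter block: {size}x{size}x{channels}")

flat = size * size * channels
total += (flat + 1) * 512  # Dense(512)
total += 4 * 512           # BatchNormalization after Dropout
total += (512 + 1) * 7     # Dense(NUM_CLASSES) with 7 emotions
print("flattened features:", flat)
print("total parameters:", total)
```

The last pooling step is where `floor(5 / 2) = 2` quietly discards a row and column. That is why the dense layer only sees a 2x2x128 map.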

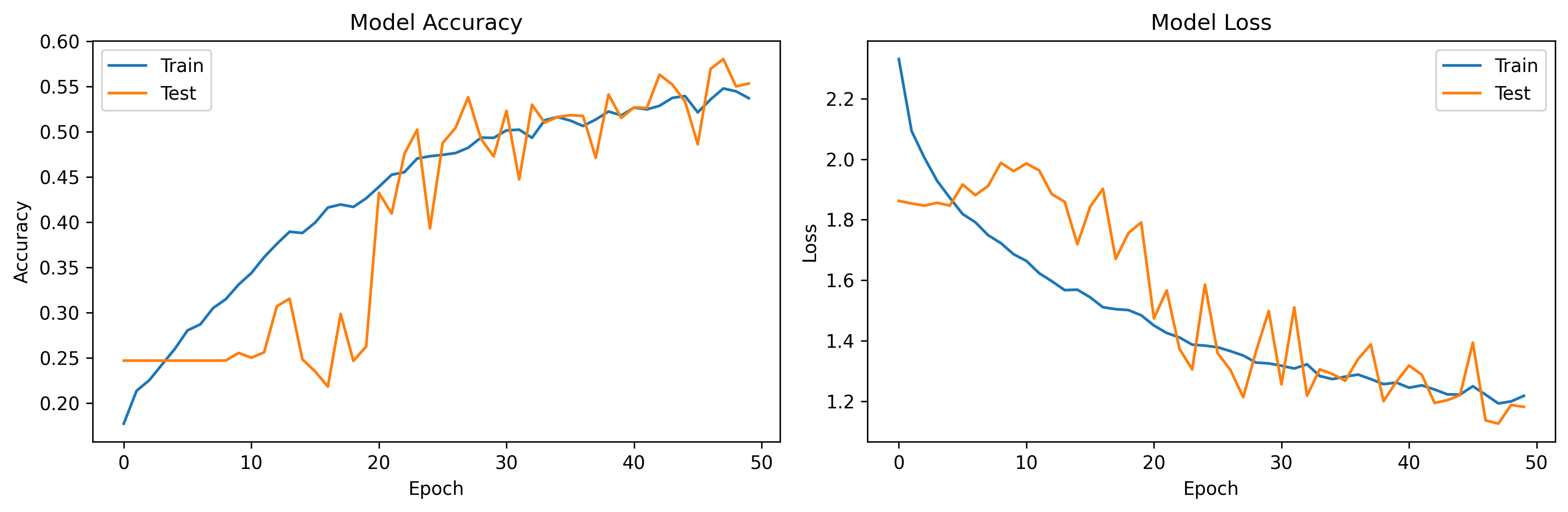

Visualize Training Results

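Now we evaluate the model on the test set and plot accuracy and loss per epoch to get an idea of our model's ability. Because the test set doubled as validation data during training, the evaluated accuracy should match the final epoch's validation accuracy.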

```python
# Evaluate the model on the test set
test_loss, test_acc = model.evaluate(test_generator)
print(f"\nTest Accuracy: {test_acc*100:.2f}%")

# Plot training & validation accuracy values
plt.figure(figsize=(12, 4))
plt.subplot(1, 2, 1)
plt.plot(history.history['accuracy'], label='Train')
plt.plot(history.history['val_accuracy'], label='Test')
plt.title('Model Accuracy')
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.legend()

# Plot training & validation loss values
plt.subplot(1, 2, 2)
plt.plot(history.history['loss'], label='Train')
plt.plot(history.history['val_loss'], label='Test')
plt.title('Model Loss')
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.legend()

plt.tight_layout()
plt.show()
```
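A single accuracy number hides which emotions the model struggles with. A confusion matrix and per-class recall make that visible. With `shuffle=False`, the rows of `model.predict(test_generator)` should line up with `test_generator.classes`. You could then take `y_pred = np.argmax(predictions, axis=1)` and `y_true = test_generator.classes`. The sketch below uses small made-up label arrays in place of real predictions, so its numbers are not results from this model.

```python
import numpy as np

EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Neutral", "Sad", "Surprise"]


def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def per_class_recall(cm):
    # Fraction of each true class predicted correctly; classes with no samples report 0.
    totals = cm.sum(axis=1)
    return np.divide(np.diag(cm), totals, out=np.zeros(len(cm)), where=totals > 0)


# Toy labels for illustration only (not results from the model above).
y_true = [0, 1, 1, 3, 3, 3, 6]
y_pred = [0, 0, 5, 3, 3, 4, 6]

cm = confusion_matrix(y_true, y_pred, len(EMOTIONS))
for name, recall in zip(EMOTIONS, per_class_recall(cm)):
    print(f"{name:>8}: {recall:.2f}")
```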

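The accuracy plateau around epoch 30 (train accuracy ~55%) is expected. FER2013 has labelling inconsistencies and low-resolution images. Beyond this point, extra training mostly risks fitting that label noise rather than learning more about real facial expressions. We therefore expect a model stopped here to generalize better to real faces than one pushed to fit noisy labels.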
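Since the curves flatten well before epoch 50, one option is to stop training automatically. Keras provides `tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=..., restore_best_weights=True)`, which can be passed to `model.fit` via `callbacks=[...]`. The plain-Python sketch below reproduces only its basic patience rule, on a made-up loss curve. The patience value is arbitrary, and the curve is not from this model.

```python
def early_stop_epoch(val_losses, patience=5, min_delta=0.0):
    """Return (stop_epoch, best_epoch) using a patience rule like Keras EarlyStopping.

    Epochs are 0-indexed. Training stops once `patience` consecutive epochs fail
    to improve the best validation loss by more than `min_delta`.
    """
    best_loss, best_epoch, wait = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Made-up validation losses that improve and then flatten out.
curve = [1.8, 1.5, 1.3, 1.2, 1.15, 1.16, 1.17, 1.15, 1.18, 1.19]
print(early_stop_epoch(curve, patience=3))
```

With `restore_best_weights=True`, Keras would also roll the model back to the best epoch rather than keeping the last one.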

Set up live face detection and classification

Finally, we set up live video, extract faces using OpenCV's Haar cascade face detector (surprisingly simple!), and label these faces using our CNN. Each detected face must be converted to grayscale and resized to 48x48 so it matches the training images. Working at this small size keeps the model lightweight enough for live video. The cost is the fine facial detail a larger input could have used to improve labelling accuracy.
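The per-face preprocessing can be sketched in NumPy to show exactly what the model receives. OpenCV documents its BGR-to-gray conversion as Y = 0.299 R + 0.587 G + 0.114 B. This float version only approximates OpenCV's integer rounding. For simplicity the sketch also swaps in nearest-neighbour resizing, whereas `cv2.resize` defaults to bilinear interpolation. Pixel values will therefore differ slightly from the real pipeline. The helper names are hypothetical.

```python
import numpy as np


def bgr_to_gray(frame_bgr: np.ndarray) -> np.ndarray:
    # Luma weights documented for COLOR_BGR2GRAY; channels are stored as B, G, R.
    b, g, r = frame_bgr[..., 0], frame_bgr[..., 1], frame_bgr[..., 2]
    return 0.299 * r + 0.587 * g + 0.114 * b


def resize_nearest(img: np.ndarray, size: int = 48) -> np.ndarray:
    # Nearest-neighbour stand-in for cv2.resize (which defaults to bilinear interpolation).
    rows = np.arange(size) * img.shape[0] // size
    cols = np.arange(size) * img.shape[1] // size
    return img[rows[:, None], cols[None, :]]


def to_model_input(face_bgr: np.ndarray) -> np.ndarray:
    gray = resize_nearest(bgr_to_gray(face_bgr))
    x = gray.astype("float32") / 255.0  # same scaling as rescale=1./255
    return x.reshape(1, 48, 48, 1)      # (batch, height, width, channels)


# A synthetic 120x100 "face crop" in BGR order, pure red everywhere.
face = np.zeros((120, 100, 3), dtype=np.uint8)
face[..., 2] = 255
x = to_model_input(face)
print(x.shape, round(float(x.max()), 3))
```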

```python
# Live emotion classification
import cv2
import numpy as np
import tensorflow as tf
from tensorflow.keras.models import load_model

# Save and/or load the model
model.save('emotion_model.keras')
model = load_model('emotion_model.keras')

# Emotion classes in the same (alphabetical) order as the training subfolders,
# which is the order of the model's output units
emotion_classes = ['Angry', 'Disgust', 'Fear', 'Happy', 'Neutral', 'Sad', 'Surprise']

# Start video capture from the default webcam
cap = cv2.VideoCapture(0)
if not cap.isOpened():
    raise RuntimeError("Could not open the default webcam")

# Load OpenCV's pre-trained Haar Cascade face detector
face_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')

while True:
    # Capture frame-by-frame
    ret, frame = cap.read()
    if not ret:
        break  # Exit if the video capture fails

    # Convert the frame to grayscale (our model expects grayscale images)
    gray_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # Detect faces in the frame
    faces = face_cascade.detectMultiScale(
        gray_frame,
        scaleFactor=1.1,
        minNeighbors=5,
        minSize=(30, 30),
        flags=cv2.CASCADE_SCALE_IMAGE
    )

    # Iterate over each face detected
    for (x, y, w, h) in faces:
        # Extract the region of interest (the face)
        roi_gray = gray_frame[y:y+h, x:x+w]

        # Resize the face ROI to match the input shape of our model (48x48)
        cropped_img = cv2.resize(roi_gray, (48, 48))

        # Normalize pixel values (as done during training)
        cropped_img = cropped_img.astype('float32') / 255.0

        # Expand dimensions to match model input shape (1, 48, 48, 1)
        cropped_img = np.expand_dims(cropped_img, axis=0)
        cropped_img = np.expand_dims(cropped_img, axis=-1)

        # Predict the emotion (verbose=0 avoids printing a progress bar for every face)
        prediction = model.predict(cropped_img, verbose=0)
        emotion_index = np.argmax(prediction)
        emotion_label = emotion_classes[emotion_index]
        confidence = np.max(prediction)

        # Draw a rectangle around the face
        cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 2)

        # Put the emotion label and confidence on the frame
        cv2.putText(frame, f"{emotion_label} ({confidence*100:.1f}%)", (x, y - 10),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.9, (255, 255, 255), 2)

    # Display the resulting frame
    cv2.imshow('Real-Time Emotion Recognition', frame)

    # Break the loop when 'q' key is pressed
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# When everything is done, release the capture and close windows
cap.release()
cv2.destroyAllWindows()
```
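The label and percentage drawn on each face come from the final softmax layer. Its output is a probability vector over the seven emotions that sums to 1. The label is the index of the largest entry, and the confidence is that entry's value. The plain-Python sketch below shows that step on made-up raw scores. The scores and helper names are illustrative, not actual model output.

```python
import math

EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Neutral", "Sad", "Surprise"]


def softmax(logits):
    # Subtract the max for numerical stability; the probabilities are unchanged.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def label_and_confidence(probs):
    # Same idea as np.argmax / np.max in the loop above, for one probability vector.
    index = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[index], probs[index]


# Made-up raw scores for one face; not actual model output.
probs = softmax([0.1, -2.0, 0.3, 2.5, 1.0, 0.2, -0.5])
label, confidence = label_and_confidence(probs)
print(f"{label} ({confidence * 100:.1f}%)")
```

A confidence close to 1/7 (about 14%) means the model is essentially guessing between emotions for that face.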

Demo

When we put it all together, here’s what it looks like (prepare to see me making goofy faces into my camera):

Conclusions

Test accuracy: ~55% on the FER2013 test split, which also served as our validation set. Real-world performance is harder to quantify, but the model handles varying lighting and partial occlusion reasonably well. For me, disgust is the hardest emotion to classify. That is unsurprising, since disgust is by far the smallest class in FER2013. Individual expressiveness also affects how reliably the model recognizes an emotion. Training on higher-resolution color images could improve accuracy, though the larger inputs could slow down live video processing.